Newly Launched

LLM Prompt Engineering Certification Course Online

Have queries? Ask us: +1 833 429 8868 (Toll Free)

2148 Learners | 4.6 (550 Ratings)

Live Online Classes starting on 30th Aug 2025

Why Choose Edureka?

Google Reviews

G2 Reviews

Sitejabber Reviews

Instructor-led Prompt Engineering with LLM Live Online Training Schedule

Flexible batches for you

18,199

Starts at 6,067 / month with No Cost EMI

Why enroll for Prompt Engineering with LLM Training Course?

Prompt Engineering with LLM Course Benefits

The global LLM market is anticipated to grow at a CAGR of 35.92% from 2025 to 2033, with 80% of enterprises adopting LLMs and prompt engineering for seamless automation and content creation. As businesses embrace these technologies, demand for experts in LLM optimization and prompt design is soaring. Our course empowers you with cutting-edge expertise to thrive in this fast-growing field at the forefront of AI innovation.

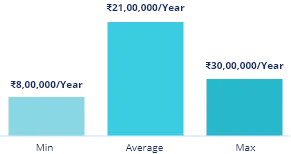

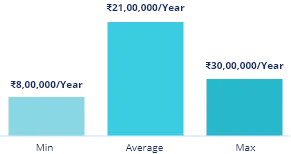

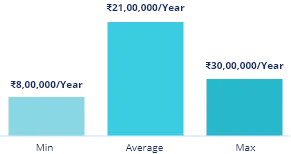

Annual Salary

Hiring Companies

Why Prompt Engineering with LLM Training Course from Edureka?

Live Interactive Learning

- World-Class Instructors

- Expert-Led Mentoring Sessions

- Instant doubt clearing

Lifetime Access

- Course Access Never Expires

- Free Access to Future Updates

- Unlimited Access to Course Content

24x7 Support

- One-On-One Learning Assistance

- Help Desk Support

- Resolve Doubts in Real-time

Hands-On Project Based Learning

- Industry-Relevant Projects

- Course Demo Dataset & Files

- Quizzes & Assignments

Industry Recognised Certification

- Edureka Training Certificate

- Graded Performance Certificate

- Certificate of Completion

Like what you hear from our learners?

Take the first step!

About your Prompt Engineering with LLM Training Course

Skills Covered

Tools Covered

Curriculum

Curriculum Designed by Experts

Generative AI Essentials

14 Topics

Topics

- What is Generative AI?

- Generative AI Evolution

- Differentiating Generative AI from Discriminative AI

- Types of Generative AI

- Generative AI Core Concepts

- LLM Modelling Steps

- Transformer Models: BERT, GPT, T5

- Training Process of an LLM Model like ChatGPT

- The Generative AI development lifecycle

- Overview of Proprietary and Open Source LLMs

- Overview of Popular Generative AI Tools and Platforms

- Ethical considerations in Generative AI

- Bias in Generative AI outputs

- Safety and Responsible AI practices

Hands-On Experience

- Creating a Small Transformer using PyTorch

- Explore OpenAI Playground to test text generation

Skills You Will Learn

- Generative AI Fundamentals

- Transformer Architecture

- LLM Training Process

- Responsible AI Practices

Prompt Engineering Essentials

10 Topics

Topics

- Introduction to Prompt Engineering

- Structure and Elements of Prompts

- Zero-shot Prompting

- One-shot Prompting

- Few-shot Prompting

- Instruction Tuning Basics

- Prompt Testing and Evaluation

- Prompt Pitfalls and Debugging

- Prompts for Different NLP Tasks (Q&A, Summarization, Classification)

- Understanding Model Behavior with Prompt Variations
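The difference between zero-shot, one-shot, and few-shot prompting comes down to how many worked examples are placed in the prompt before the new input. The sketch below illustrates this with plain string assembly. The `build_prompt` helper, the `Input:`/`Output:` layout, and the sample sentences are illustrative assumptions, not material from the course, and no model is called.

```python
from typing import Sequence


def build_prompt(task: str, query: str,
                 examples: Sequence[tuple[str, str]] = ()) -> str:
    """Assemble a prompt: instruction, optional worked examples, then the new input.

    Hypothetical helper for illustration; the Input/Output layout is one
    common convention, not a required format.
    """
    lines = [task.strip(), ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    # The prompt ends with an open "Output:" slot for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


TASK = "Classify the sentiment of each input as positive or negative."
EXAMPLES = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
QUERY = "Setup was quick and painless."

zero_shot = build_prompt(TASK, QUERY)                # no examples
one_shot = build_prompt(TASK, QUERY, EXAMPLES[:1])   # one example
few_shot = build_prompt(TASK, QUERY, EXAMPLES)       # several examples

print(few_shot)
```

Each resulting string would be sent to a model as-is; comparing the model's answers across the three variants is one simple way to study how examples change behavior.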

Hands-On Experience

- Craft effective zero-shot, one-shot, and few-shot prompts

- Write prompts for different NLP tasks: Q&A, summarization, classification

- Debug poorly structured prompts through iterative testing

- Analyze prompt performance using prompt injection examples

Skills You Will Learn

- Prompt Structuring

- Prompt Tuning

- Task-Specific Prompting

- Model Behavior Analysis

Advanced Prompting Techniques

14 Topics

Topics

- Chain-of-Thought (CoT) Prompting

- Tree-of-Thought (ToT) Prompting

- Self-Consistency Prompting

- Generated Knowledge Prompting

- Step-back Prompting

- Least-to-Most Prompting

- Adversarial Prompting & Prompt Injection

- Defenses against Prompt Injection

- Auto-prompting techniques

- Semantic Search for Prompt Selection

- Context Window Optimization strategies

- Dealing with ambiguous prompts

- Human-in-the-loop prompt refinement

- Prompt testing and validation methodologies
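As a rough illustration of one technique from this list, self-consistency prompting samples several independent answers to the same prompt and keeps the most frequent one. The sketch below assumes a caller-supplied `sample` function that returns only the final answer of one reasoning path. Here it is replaced by a stub with canned answers, so no real model or API is involved, and all names are hypothetical.

```python
from collections import Counter
from typing import Callable


def self_consistent_answer(sample: Callable[[str], str],
                           prompt: str, n: int = 5) -> str:
    """Draw n answers for the same prompt and return the majority answer."""
    answers = [sample(prompt).strip().lower() for _ in range(n)]
    # most_common(1) returns the answer with the highest vote count.
    return Counter(answers).most_common(1)[0][0]


# Stub standing in for a model sampled at non-zero temperature (assumption).
_canned = iter(["42", "41", "42", "42", "40"])


def fake_sample(prompt: str) -> str:
    return next(_canned)


answer = self_consistent_answer(
    fake_sample, "What is 6 * 7? Think step by step.", n=5
)
print(answer)
```

In a real setup, `sample` would call a model with a chain-of-thought prompt and extract the final answer from each response before voting.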

Hands-On Experience

- Implementing CoT and ToT

- Testing Prompt Robustness

- Auto-Prompt Generation

- Human-in-the-loop Refinement

Skills You Will Learn

- Multi-step Prompting

- Prompt Injection Defense

- Semantic Prompt Optimization

- Prompt Evaluation Techniques

Working with LLM APIs and SDKs

10 Topics

Topics

- LLM Landscape: OpenAI, Anthropic, Gemini, Mistral API, LLaMA

- Core Capabilities: Summarization, Q&A, Translation, Code Generation

- Key Configuration Parameters: Temperature, Top_P, Max_Tokens, Stop Sequences

- Inference Techniques: Sampling, Beam Search, Greedy Decoding

- Efficient Use of Tokens and Context Window

- Calling Tools

- Functions With LLMs

- Deployment Considerations for Open-Source LLMs (Local, Cloud, Fine-Tuning)

- Rate Limits, Retries, Logging

- Understanding Cost, Latency, and Performance and Calculating via Code
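To make the last topic concrete, the cost of an API call can be estimated from its input and output token counts and a per-token price. The sketch below is a minimal example under stated assumptions: the `Pricing` values are placeholders rather than any provider's real rates, and the four-characters-per-token rule is only a rough heuristic for English text. A provider's own tokenizer should be used for accurate counts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Price per 1,000 tokens in USD (placeholder values, not real rates)."""
    input_per_1k: float
    output_per_1k: float


def estimate_cost(input_tokens: int, output_tokens: int,
                  pricing: Pricing) -> float:
    """Return the estimated cost in USD for one request."""
    return ((input_tokens / 1000) * pricing.input_per_1k
            + (output_tokens / 1000) * pricing.output_per_1k)


def rough_token_count(text: str) -> int:
    """Crude estimate: about 4 characters per token (assumption)."""
    return max(1, len(text) // 4)


HYPOTHETICAL = Pricing(input_per_1k=0.50, output_per_1k=1.50)

cost = estimate_cost(input_tokens=2000, output_tokens=500,
                     pricing=HYPOTHETICAL)
print(f"Estimated cost: ${cost:.2f}")
```

Multiplying the per-request estimate by expected daily traffic gives a first-order budget figure that can be compared across models.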

Hands-On Experience

- API Calls with OpenAI, Gemini, Anthropic

- Tuning Parameters for Text Generation

- Token Usage Optimization

Skills You Will Learn

- API Integration

- Parameter Tuning

- Inference Techniques

- Cost Optimization

Building LLM Apps with LangChain and LlamaIndex

9 Topics

Topics

- LangChain Overview

- LlamaIndex Overview

- Building With LangChain: Chains, Agents, Tools, Memory

- Understanding LangChain Expression Language (LCEL)

- Working With LlamaIndex: Document Ingestion, Index Building, Querying

- Integrating LangChain and LlamaIndex: Common Patterns

- Using External APIs and Tools as Agents

- Enhancing Reliability: Caching, Retries, Observability

- Debugging and Troubleshooting LLM Applications

Hands-On Experience

- Building Chains and Agents

- Indexing with LlamaIndex

- External API Integration

- Observability Implementation

Skills You Will Learn

- LangChain Workflows

- Document Indexing

- Tool Integration

Developing RAG Systems

14 Topics

Topics

- What is RAG and Why is it Important?

- Addressing LLM limitations with RAG

- The RAG Architecture: Retriever, Augmenter, Generator

- DocumentLoaders

- Embedding Models in RAG

- VectorStores as Retrievers in LangChain and in LlamaIndex

- RetrievalQA Chain and its variants

- Customizing Prompts for RAG

- Advanced RAG Techniques: Re-ranking retrieved documents

- Query Transformations

- Hybrid Search

- Parent Document Retriever and Self-Querying Retriever

- Evaluating RAG Systems: Retrieval Metrics

- Evaluation Metrics for Generation
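Two retrieval metrics commonly used when evaluating a RAG retriever are recall@k and mean reciprocal rank (MRR). The sketch below shows how they might be computed from ranked document IDs. The function names and the toy `RUNS` data are illustrative assumptions, not course material.

```python
def recall_at_k(retrieved, relevant, k):
    """Fraction of relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & set(relevant))
    return hits / len(relevant)


def mean_reciprocal_rank(runs):
    """Average of 1/rank of the first relevant document per query.

    `runs` is a list of (retrieved_ids, relevant_ids) pairs; a query with
    no relevant document in its results contributes 0.
    """
    if not runs:
        return 0.0
    total = 0.0
    for retrieved, relevant in runs:
        relevant = set(relevant)
        for rank, doc_id in enumerate(retrieved, start=1):
            if doc_id in relevant:
                total += 1.0 / rank
                break
    return total / len(runs)


# Toy data: ranked results and ground-truth relevant IDs for three queries.
RUNS = [
    (["d3", "d1", "d7"], {"d1"}),        # first relevant hit at rank 2
    (["d2", "d5", "d9"], {"d2", "d9"}),  # first relevant hit at rank 1
    (["d4", "d6", "d8"], {"d0"}),        # no relevant hit
]

mrr = mean_reciprocal_rank(RUNS)
recall = recall_at_k(["d2", "d5", "d9"], {"d2", "d9"}, k=2)
print(mrr, recall)
```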

Hands-On Experience

- Build a RAG Pipeline

- Implement RetrievalQA With Custom Prompts

- Evaluate Retrieval and Generation Quality Using Standard Metrics

Skills You Will Learn

- RAG Architecture Understanding

- Document Retrieval Techniques

- Prompt Customization

Vector Databases and Embedding in practice

16 Topics

Topics

- What are Text Embeddings?

- How LLMs and Embedding Models generate embeddings

- Semantic Similarity and Vector Space

- Introduction to Vector Databases

- Key features: Indexing, Metadata Filtering, CRUD operations

- ChromaDB: Local setup, Collections, Document and Embedding Storage

- Pinecone: Cloud-native, Indexes, Namespaces, and Metadata filtering

- Weaviate: Open-source, Vector-native, and Graph Capabilities

- Other Vector Databases: FAISS, Milvus, Qdrant

- Similarity Search Algorithms

- Building Search Pipelines End to End with an Example Code

- Vector Indexing techniques

- Data Modeling in Vector Databases

- Updating and Deleting Vectors

- Choosing the Right Embedding Model

- Evaluation of Retrieval quality from Vector Databases
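Semantic similarity search, at its simplest, ranks stored vectors by cosine similarity to a query vector. The sketch below does this by brute force in plain Python. The three-dimensional vectors and document IDs are made up for illustration; real embeddings have hundreds or thousands of dimensions, and vector databases typically use approximate indexes rather than a full scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k (doc_id, score) pairs most similar to the query."""
    scored = [(doc_id, cosine_similarity(query, vec))
              for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "vector store": document ID -> hypothetical embedding.
INDEX = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "gift-cards": [0.0, 0.2, 0.9],
}

results = top_k([0.8, 0.2, 0.0], INDEX, k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```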

Hands-On Experience

- Building a Search Pipeline

- Retrieval Evaluation

Skills You Will Learn

- Text Embedding Concepts

- Vector Database Usage

- Similarity Search

Building and Deploying End-to-End GenAI Applications

12 Topics

Topics

- Architecting LLM-Powered Applications

- Types of GenAI Apps: Chatbots, Copilots, Semantic Search / RAG Engines

- Design Patterns: In-Context Learning vs RAG vs Tool-Use Agents

- Stateless vs Stateful Agents

- Modular Components: Embeddings, VectorDB, LLM, UI

- Key Architectural Considerations: Latency, Cost, Privacy, Memory, Scalability

- Building GenAI APIs with FastAPI

- RESTful Endpoint Structure

- Async vs Sync, CORS, Rate Limiting, API Security

- Orchestration Tools: LangServe, Chainlit, Flowise

- Cloud Deployment: GCP

- Containerization and Environment Setup

Hands-On Experience

- Wrap LLM into FastAPI

- Deploy Chatbot using LangChain

- GCP Cloud Run Deployment

- Logging with LangSmith

Skills You Will Learn

- GenAI App Design

- REST API Development

- Cloud Deployment

Evaluating GenAI Applications and Enterprise Use Cases

12 Topics

Topics

- Evaluation Metrics: Faithfulness, Factuality, RAGAs, BLEU, ROUGE, MRR

- Human and Automated Evaluation Loops

- Logging, Tracing, and Observability Tools: LangSmith, PromptLayer, Arize

- Prompt and Output Versioning

- Chain Tracing and Failure Monitoring

- Real-Time Feedback Collection

- GenAI Use Cases: Customer Support, Legal, Healthcare, Retail, Finance

- Contract Summarization

- Legal Q&A Bots

- Invoice Parsing with RAG

- Product Search Applications

- Domain Adaptation Strategies

Hands-On Experience

- Calculate RAGAs metrics for retrieval faithfulness

- Set up LangSmith for real-time feedback collection

Skills You Will Learn

- Evaluating and monitoring GenAI model performance

- Implementing effective observability and debugging workflows

Multimodal LLMs and Beyond

14 Topics

Topics

- Introduction to Multimodal LLMs (GPT-4V, LLaVA, Gemini)

- How multimodal models process different data types

- Use Cases: Image Captioning, Visual Q&A, Video Summarization

- Working with Vision-Language Models (VLMs): Image inputs, text outputs

- Image Loaders in LangChain/LlamaIndex

- Simple visual Q&A applications

- Audio Processing with LLMs: Speech-to-Text (ASR)

- Text-to-Speech (TTS) integration

- Video understanding with LLMs

- Challenges in Multimodal AI

- Ethical Considerations in Multimodal AI

- Agent Frameworks (AutoGPT, CrewAI, LangGraph, MetaGPT)

- ReAct and Plan-and-Act agent strategies

- Future Directions

Hands-On Experience

- Build visual Q&A pipelines

Skills You Will Learn

- Multimodal Understanding

- Vision-Language Processing

- Agent Frameworks

Bonus Module: Fine-tuning & PEFT (Self-paced)

12 Topics

Topics

- Introduction to Model Finetuning: When Prompt Engineering Isn’t Enough

- Overview of Parameter-Efficient Finetuning (PEFT)

- LoRA (Low-Rank Adaptation): Concept and Architecture

- QLoRA: Quantized LoRA for Finetuning Large Models Efficiently

- Adapter Tuning: Modular and Lightweight Finetuning

- Comparing Finetuning Techniques: Full vs. LoRA vs. QLoRA vs. Adapters

- Selecting the Right Finetuning Strategy Based on Task and Resources

- Introduction to Hugging Face Transformers and PEFT Library

- Setting Up a Finetuning Environment with Google Colab

- Preparing Custom Datasets for Instruction Tuning and Task Adaptation

- Monitoring Training Metrics and Evaluating Fine-tuned Models

- Use Cases: Domain Adaptation, Instruction Tuning, Sentiment Customization

Hands-On Experience

- Fine-tune a small LLM using LoRA with the PEFT library on Google Colab

- Apply QLoRA to a quantized model using Hugging Face + Colab setup

- Implement adapter tuning on a pre-trained model for a classification task

- Compare output quality before and after finetuning using evaluation prompts

Skills You Will Learn

- Finetuning LLMs with LoRA, QLoRA, and Adapters

- Selecting optimal finetuning techniques for different scenarios

- Setting up and running parameter-efficient finetuning workflows using Hugging Face

Bonus Module: LLMOps and Evaluation (Self-paced)

12 Topics

Topics

- Introduction to LLMOps: Managing the ML Lifecycle for Large Language Models

- Prompt Versioning and Experiment Tracking

- Model Monitoring: Latency, Drift, Failures, and Groundedness

- Safety and Reliability Evaluation: Toxicity, Hallucination, Bias Detection

- Evaluation Frameworks Overview: RAGAS, TruLens, LangSmith

- RAG Evaluation with RAGAS: Precision, Recall, Faithfulness

- Observability in Production: Logs, Metrics, Tracing LLM Workflows

- Using LangSmith for Chain/Agent Tracing, Feedback, and Dataset Runs

- Integrating TruLens for Human + Automated Feedback Collection

- Inference Cost Estimation and Optimization Techniques

- Budgeting Strategies for Token Usage, API Calls, and Resource Allocation

- Production Best Practices: Deploying With Guardrails and Evaluation Loops

Hands-On Experience

- Track and compare multiple prompt versions using LangSmith

- Implement a RAG evaluation pipeline using RAGAS on a custom QA system

- Monitor model behavior and safety using TruLens in a live demo

- Visualize cost and performance metrics from a deployed LLM API

Skills You Will Learn

- Setting up LLMOps pipelines for observability and evaluation

- Using RAGAS, TruLens, and LangSmith to assess model quality and safety

- Managing cost and performance trade-offs in production GenAI systems

Course Details

Course Overview and Key Features

This LLM Prompt Engineering Certification Course guides you through basic to advanced generative AI techniques, including prompt engineering, retrieval-augmented generation (RAG), and vector databases. You will gain practical skills to design and deploy cutting-edge GenAI applications using popular tools such as Python, PyTorch, LangChain, and OpenAI. The course also focuses on mastering LLM APIs, application architecture, and production-ready deployment strategies, equipping you to build real-world AI solutions.

Key Features

- Comprehensive coverage from fundamentals to advanced generative AI concepts

- Hands-on experience with prompt crafting techniques to elicit precise LLM responses

- Exploration of advanced prompting strategies like zero-shot, few-shot, and chain-of-thought prompting

- Training on retrieval-augmented generation (RAG) and vector database integration

- Practical usage of key tools and libraries: Python, PyTorch, LangChain, OpenAI API, and more

- Understanding of LLMOps principles for deploying and managing LLM applications

- Insights into ethical considerations, including bias and misinformation in prompt design

- Application-focused learning across diverse domains such as content creation, code generation, and data analysis

Who should take this LLM prompt engineering certification course?

If you are an AI enthusiast, developer, or professional working with natural language processing, AI product development, or automation and you want to get better at designing effective prompts to make your AI applications smarter, the Prompt Engineering with LLM Course is a great fit for you.

What are the prerequisites for this course?

To succeed in this course, you should have a basic understanding of Python, machine learning, deep learning, natural language processing, generative AI, and prompt engineering concepts. However, you will receive self-learning refresher materials on generative AI and prompt engineering before the live classes begin.

What are the system requirements for the LLM Prompt Engineering course?

The system requirements for this Prompt Engineering with LLM Course include:

- A laptop or desktop computer with at least 8 GB of RAM and an Intel Core i3 or better processor, to run NLP and machine learning models.

- A stable, high-speed internet connection for accessing online course materials, videos, and software.

How do I execute the practicals in this course?

Practicals for this Prompt Engineering course are done using Python, VS Code, and Jupyter Notebook. You will get a detailed step-by-step installation guide in the LMS to set up your environment smoothly. Additionally, Edureka’s Support Team is available 24/7 to help with any questions or technical issues during your practical sessions.

Prompt Engineering with LLM Course Projects

Prompt Engineering with LLM Course Certification

Upon successful completion of the Prompt Engineering with LLM Course, Edureka provides the course completion certificate, which is valid for a lifetime.

To unlock Edureka’s Prompt Engineering with LLM course completion certificate, you need to complete all the modules and successfully finish the quizzes and hands-on projects included in the curriculum.

The Prompt Engineering with LLM certification can be tough if you’re new to the field, because it covers a lot, from understanding how large language models work to actually crafting prompts and building projects.

Yes, once you complete the certification, you will have lifetime access to the course materials. You can revisit the course content anytime, even after completing the certification.

The Certificate ID can be verified at www.edureka.co/verify to check the authenticity of the certificate.

FAQs

What is LLM?

A Large Language Model (LLM) is an AI model trained on very large amounts of text data to understand and generate human-like language.

What is prompt engineering in LLM?

It's the art of crafting inputs (prompts) that guide large language models (LLMs) to give accurate, useful responses.

Why should I learn LLM?

Learning to work with LLMs lets you build advanced AI apps, such as chatbots and content tools, that are shaping the future of tech.

What are examples of LLMs?

Examples include OpenAI’s GPT series (such as GPT-4, which powers ChatGPT) and Google’s BERT, both powerful models for language tasks.

What if I miss a live class of this training course?

You will have access to the recorded sessions that you can review at your convenience.

What if I have queries after I complete the course?

You can reach out to Edureka’s support team for any queries and you’ll have access to the community forums for ongoing help.

What skills will I acquire upon completing the Prompt Engineering with LLM training course?

Upon completing the Prompt Engineering with LLM training, you will acquire skills in prompt structuring, prompt tuning, task-specific prompting, and model behavior analysis.

Who are the instructors for the LLM Prompt Engineering Course?

All instructors at Edureka are industry practitioners with a minimum of 10 to 12 years of relevant IT experience. They are subject matter experts trained by Edureka to provide a great learning experience to participants.

What is the cost of a prompt engineering course?

The price of the course is 18,999 INR.

What is the salary of a prompt engineer fresher?

According to Glassdoor, freshers in India typically start at around ₹6 to ₹7 LPA, while in the US salaries can range from $70,000 to $100,000 annually.

Will I get placement assistance after completing this Prompt Engineering with LLM training?

Edureka provides placement assistance by connecting you with potential employers and helping with resume building and interview preparation.

How soon after signing up would I get access to the learning content?

Once you sign up, you will get immediate access to the course materials and resources.

Is the course material accessible to the students even after the Prompt Engineering with LLM training is over?

Yes, you will have lifetime access to the course material and resources, including updates.

Is there a demand for prompt engineering?

Yes, prompt engineers are currently in high demand. With the rapid growth of AI adoption, companies are actively seeking professionals skilled in prompt design.

Is prompt engineering the future?

Prompt engineering is expected to keep growing and adapting as models evolve, rather than becoming outdated.

Have more questions?

Course counsellors are available 24x7
